Computational Analysis of Applied Weighted Voting Games

project Sonata 2015/19/D/ST6/03113, funded by National Science Center, Poland

implemented @ MIM University of Warsaw, 2016-2019.

project Sonata 2015/19/D/ST6/03113, funded by National Science Center, Poland

implemented @ MIM University of Warsaw, 2016-2019.

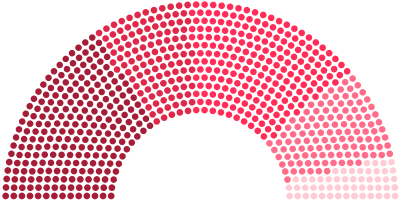

With numerous parties in the parliament it is not easy to assess the power of each one of them. Typically, a party with more than a half of all seats obviously has all the power as it can pass any bill. Losing the majority even by a single vote changes the situation diametrically - decisions have to be made by coalitions, and the power becomes a complex function of the number of seats held by each party.

But mathematicians have a special tool designed exactly for such analysis - weighted voting games. In this very simple model each player (in our example representing a political party) has a number of votes and a decision is made if the number of players' votes in support of it exceeds a certain threshold. From the model, there is only one step to calculate the power - to this end, power indices has been proposed, that consider how often each player could provide the swing votes that would convert the losing side into the winning side.

As most theoretical models, weighted voting games are based on various simplifying assumptions. In particular, the model assumes that any player is always ready to cooperate with all others. In reality, however, this is usually not the case, due to sympathies and antipathies between political parties.

The key objective of our project is to propose a more realistic model of weighted voting games which addresses the aforementioned problems and to develop as efficient as possible techniques to calculate power indices within this model.

At the heart of multi-agent systems is the ability to cooperate in order to improve the performance of individual agents and/or the system as a whole. While a widespread assumption in the literature is that such cooperation is essentially unrestricted, in many realistic settings this assumption does not hold. A highly-influential approach for modelling such scenarios are graph-restricted games introduced by Myerson. In this approach, agents are represented by nodes in a graph, edges represent communication channels, and a group can generate an arbitrary value only if there exists a direct or indirect communication channel between every pair of agents within the group. Two fundamental solution concepts that were proposed for such games are the Myerson value and the Shapley value. While an algorithm has been developed to compute the Shapley value in arbitrary graph-restricted games, no such general-purpose algorithm has been developed for the Myerson value to date. With this in mind, we set to develop for such games a general-purpose algorithm to compute the Myerson value, and a more efficient algorithm to compute the Shapley value.

Myerson's graph-restricted games are a well-known formalism for modeling cooperation that is subject to restrictions. (...) Calvo et al. generalized these results by considering probabilistic graphs in which agents can cooperate via links only to some extent, i.e., with some probability. In this paper, we propose the first algorithm for the Calvo et al.'s generalised model. Our algorithm is based on the enumeration of all connected subgraphs in the graph. As a sample application of the new algorithm, we consider the probabilistic graph that represents likelihood of pairwise collaboration between political parties before the 2015 General Elections in the UK.

Assessing the progress made in AI and contributions to the state of the art is of major concern to the community. Recently, Frechette et al. [2016] advocated performing such analysis via the Shapley value, a concept from coalitional game theory. In this paper, we argue that while this general idea is sound, it unfairly penalizes older algorithms that advanced the state of the art when introduced, but were then outperformed by modern counterparts. Driven by this observation, we introduce the temporal Shapley value, a measure that addresses this problem while maintaining the desirable properties of the (classical) Shapley value. We use the tempo- ral Shapley value to analyze the progress made in (i) the different versions of the Quicksort algorithm; (ii) the annual SAT competitions 2007–2014; (iii) an annual competition of Constraint Programming, namely the MiniZinc challenge 2014–2016. Our analysis reveals novel insights into the development made in these important areas of research over time.